Building a Deep Learning PC without breaking the bank

Whilst participating in a Kaggle competition to identify dog breeds from images, I found myself using an AWS GPU compute service to train the deep neural network. However, the costs quickly mounted up, as I’d often be tweaking the code and paying for the server even when it wasn’t computing.

I decided to investigate the cost of building a deep learning capable PC that I could use for some entry-level Kaggle competitions and which would allow me to take my time with the code. A quick search online for such a PC suggests the cost should well exceed $1000, and some builds are upwards of $4000. However, the kit you need depends entirely on the problem, and since my intention is to learn and to compete in entry-level Kaggle competitions, I think it can be done for much less.

Selecting the Kit

GPU

The most important piece of hardware in a deep learning rig is of course the GPU. I did a lot of research and found that the Nvidia GTX 1060 gave the best tradeoff between price and performance. The GTX 1070 and 1080 Ti are better still, but they were a little beyond my budget, so I stuck with the 1060, which I managed to pick up for £209.99, a bargain in my opinion.

CPU

The CPU is not quite as important as the GPU, so some money can be saved here. I went for an Intel i5 6400, which I sourced from eBay for £85.

RAM

The more RAM the better, but with prices having skyrocketed since I last purchased RAM, I decided that 16GB would be more than sufficient. DDR4 can be overclocked to much higher speeds than DDR3, so that was a must. I went for a pair of Patriot 8GB 3000MHz DDR4 modules at a cost of £168.10.

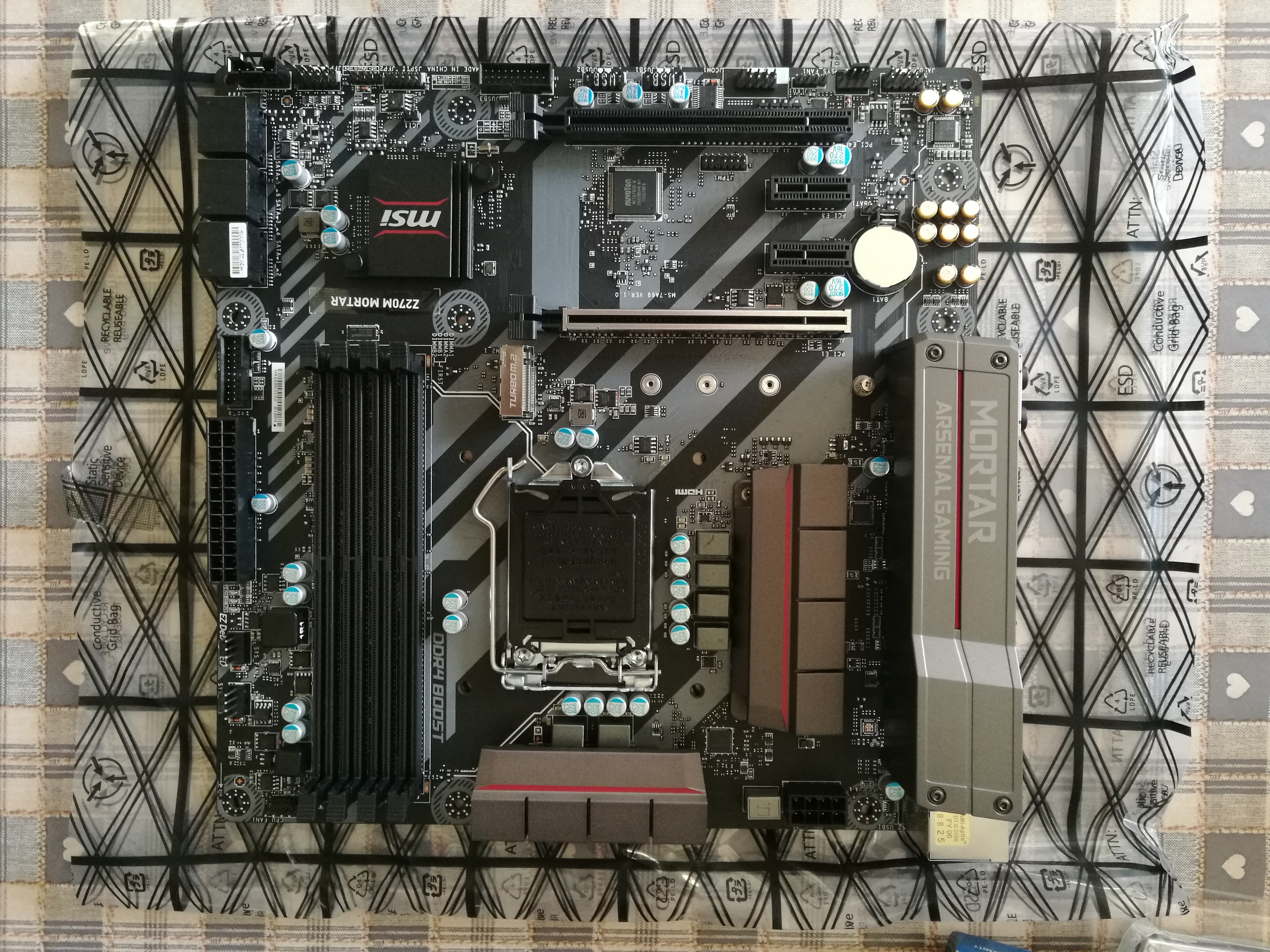

Motherboard

Last but not least, the motherboard. To run the RAM at 3000MHz, the motherboard must support RAM overclocking, which for the CPU I have chosen is only available on the Z170/Z270 chipsets. I therefore went with an MSI Mortar Micro ATX Z270 motherboard at a cost of £112.98.

Other Items

Other items which are less important, and could quite easily be swapped for cheaper versions, are the case and power supply. Since I thought I might also use this machine as a cryptocurrency miner, I decided to go with a case that has room for a second graphics card and a PSU with a good efficiency rating (silver).

Total Cost

Below is a list of all the items sourced for the new build.

| Item | Cost |
|---|---|
| Nvidia GTX 1060 (6GB) | £209.99 |
| Intel i5 6400 | £85 |
| Heatsink | £15 |
| Patriot 3000MHz DDR4 | £168.10 |
| MSI Mortar Z270M | £112.98 |
| Be Quiet BN274 600W PSU | £51.83 |
| Fractal Design Define Mini C TG | £72 |

I decided to keep the SSD and HDD from a previous build, so let’s add in the approximate cost of an SSD from Amazon.

| Item | Cost |
|---|---|
| SanDisk SSD PLUS 240 GB Sata III | £72.99 |

Total Cost £787.89

At the current exchange rate that works out to about $1050, so we’re not far off the $1000 mark.
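For anyone adapting the parts list, the arithmetic behind the total can be sketched in a few lines of Python. The prices are copied from the tables above; the exchange rate of 1.33 is an assumption chosen to roughly match the dollar figure quoted here, not a quoted market rate.

```python
from decimal import Decimal

# Prices in GBP, copied from the parts tables in this post.
parts = {
    "Nvidia GTX 1060 (6GB)": "209.99",
    "Intel i5 6400": "85.00",
    "Heatsink": "15.00",
    "Patriot 3000MHz DDR4": "168.10",
    "MSI Mortar Z270M": "112.98",
    "Be Quiet BN274 600W PSU": "51.83",
    "Fractal Design Define Mini C TG": "72.00",
    "SanDisk SSD PLUS 240 GB": "72.99",
}

# Decimal avoids floating-point rounding noise when adding currency values.
total_gbp = sum(Decimal(price) for price in parts.values())

# ASSUMPTION: illustrative exchange rate, not taken from the post.
USD_PER_GBP = Decimal("1.33")
total_usd = total_gbp * USD_PER_GBP

print(f"Total: GBP {total_gbp} (about USD {total_usd:.0f})")
```

Swapping a part for a cheaper one is then just a matter of editing its entry and re-running the script.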

Build Photos

Deep Learning Tools

After setting up Linux and the basic Nvidia graphics drivers, here is a list of the extra tools that we need for deep learning.

CUDA Framework

CUDA is a framework that allows us to make use of Nvidia GPUs for parallel processing, see https://developer.nvidia.com/cuda-zone
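Before layering more tooling on top, it can be worth confirming that the driver actually sees the card. The following is a minimal sketch that shells out to the `nvidia-smi` utility shipped with the Nvidia driver; the helper name `list_nvidia_gpus` is hypothetical, and the sketch assumes `nvidia-smi` is on the PATH when the driver is installed.

```python
import shutil
import subprocess


def list_nvidia_gpus():
    """Return one 'name, memory' string per GPU, or [] if none can be queried."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver utilities not installed, or not on the PATH
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=True,
            timeout=30,
        )
    except (subprocess.CalledProcessError, subprocess.TimeoutExpired, OSError):
        return []  # the tool exists but could not talk to the driver
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = list_nvidia_gpus()
print(gpus if gpus else "No Nvidia GPU visible to the driver")
```

An empty result usually points at a driver problem rather than a CUDA one, which narrows down where to look.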

Nvidia Docker

Docker containers are like small lightweight VMs that can be loaded with software and started and stopped within seconds. Nvidia Docker gives the containers access to the GPU of the host machine: https://github.com/NVIDIA/nvidia-docker

Since we’re using Keras, I found a great Dockerfile and a helpful Makefile that let us deploy a container with all the tools we require for deep learning, without having to locate, configure and install all the dependencies.

Once the code is checked out, we simply navigate to the keras/docker folder and start a Docker container, which should automatically launch a Jupyter notebook ready to use.

make notebook GPU=0

CPU vs GPU

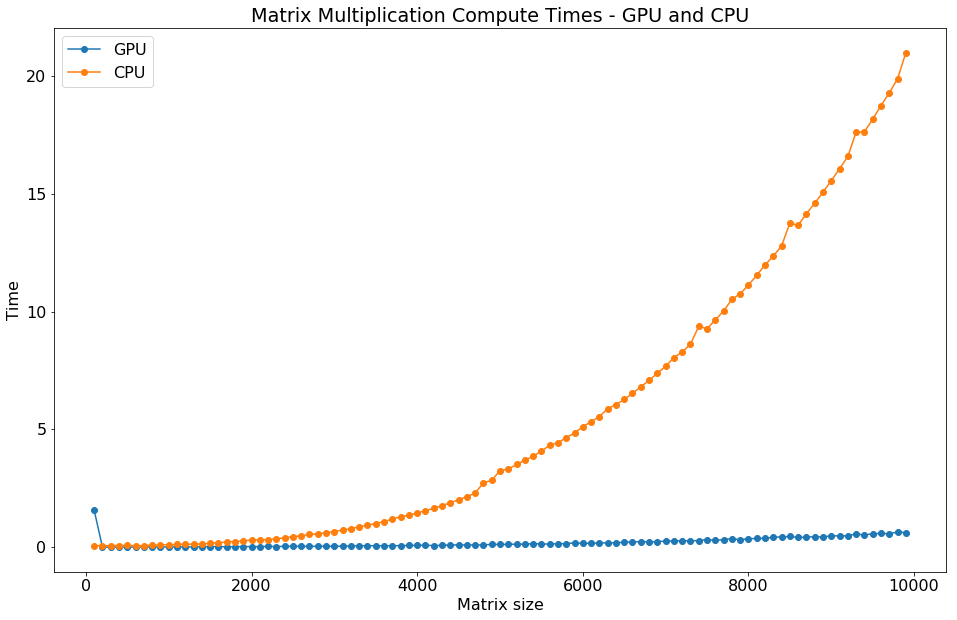

One of the biggest reasons why deep learning has become so popular in recent years is the advances in GPU hardware. To give us an idea of just how much better GPUs can be for training neural networks, let’s compare CPU and GPU performance on a simple matrix multiplication.

I generated two random matrices, multiplied them together and timed how long the process took. I increased the dimension of the matrices by 100 each time, up to 10000x10000, did this on both the CPU and the GPU, and plotted the results in a chart.
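The timing loop can be sketched roughly as follows. This is not the original benchmark code, which is not shown in this post; it is a minimal NumPy version of the CPU side, with hypothetical function names `time_matmul` and `benchmark`, and with much smaller sizes so that it finishes quickly.

```python
import time

import numpy as np


def time_matmul(n, xp=np, sync=None):
    """Time a single n x n matrix multiplication using array module `xp`."""
    a = xp.random.rand(n, n)
    b = xp.random.rand(n, n)
    start = time.perf_counter()
    xp.matmul(a, b)
    if sync is not None:
        sync()  # GPU libraries launch work asynchronously; wait for it to finish
    return time.perf_counter() - start


def benchmark(sizes, xp=np, sync=None):
    """Return a list of (dimension, seconds) pairs, one per matrix size."""
    return [(n, time_matmul(n, xp, sync)) for n in sizes]


# Small sweep for illustration; the post's sweep went up to 10000 in steps of 100.
cpu_results = benchmark(range(100, 501, 100))
for n, seconds in cpu_results:
    print(f"{n}x{n}: {seconds:.4f}s")
```

The `xp` parameter is there so that a NumPy-compatible GPU array library can be dropped in. Assuming CuPy is installed, for example, something like `benchmark(sizes, xp=cupy, sync=cupy.cuda.Stream.null.synchronize)` should time the same multiplication on the GPU. The explicit synchronisation matters, because without it only the kernel launch is timed.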

As you can see from the chart above, the GPU (orange) is significantly faster at this problem, and the larger the matrix, the more significant the time savings. Given that this is just one matrix multiplication, and training a neural network might involve hundreds of them, you can see how much of a difference using a GPU can make.